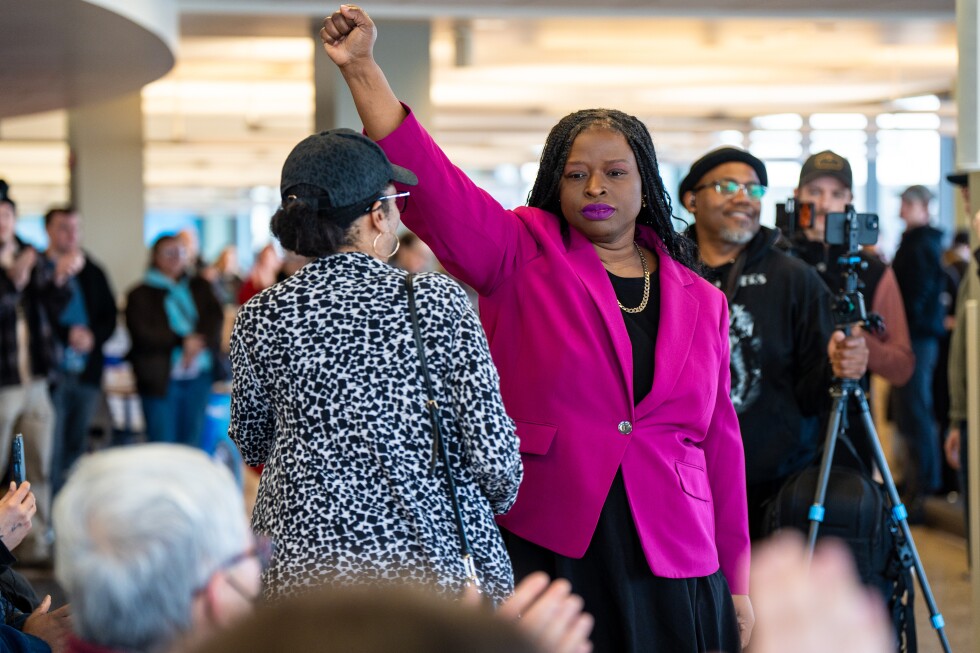

The digital information ecosystem faces a critical stress test as official political communications operations increasingly embrace generative AI for content creation. Political actors have long used visual satire and memes, but the recent dissemination of a hyper-realistic, AI-altered image showing civil rights attorney Nekima Levy Armstrong in tears after an arrest signals a troubling pivot in information warfare strategy.

This incident, in which an image was manipulated to convey a specific emotional narrative and then shared from official accounts, goes beyond playful digital commentary. Misinformation experts view it as a calculated move to blur the line between documented reality and synthetic fabrication. Analysts see the defense offered, that such content merely continues earlier 'meme' culture, as a thinly veiled attempt to deflect accountability for deploying sophisticated manipulated media.

Zach Henry, a communications consultant, notes that this tactic is highly effective at engaging the 'terminally online' base, whose members recognize the layered context of a meme. For the broader public, however, the photorealistic quality of the AI manipulation can slip past critical scrutiny. Less digitally native audiences are left unsure whether what they see is real, and that uncertainty often drives engagement through confusion or outrage.

Academics are sounding alarms over the institutional damage. Professor Michael A. Spikes emphasizes that when government entities, supposedly the arbiters of verifiable information, actively create and distribute synthetic content, the foundational trust that civic life depends on erodes. This behavior risks deepening existing crises of confidence in institutions such as the press and academia.

Ramesh Srinivasan of UCLA highlights that AI tools are not just introducing false content; they are fundamentally challenging the public's ability to anchor themselves to a shared understanding of truth or evidence. The normalization of unlabeled synthetic content by powerful actors grants implicit permission for others to flood the zone with even more extreme, algorithmically favored fabrications.

This phenomenon is not confined to political messaging. Recent ICE enforcement actions have already been accompanied by a wave of AI-generated videos depicting fabricated confrontations, illustrating how quickly synthetic content can shape narratives around sensitive public safety events. Jeremy Carrasco, a media literacy specialist, notes that while some viewers may recognize the fakes, most struggle to judge authenticity, especially when the stakes are high.

As the technology matures, the challenge deepens. Technical measures such as cryptographically signed provenance metadata (e.g., the C2PA standard) offer a potential path toward verifiable content origins, but widespread adoption remains distant. The current trajectory suggests that the intentional deployment of high-fidelity synthetic media by influential entities will remain a defining, and deeply corrosive, feature of the digital public square for the foreseeable future. (Source: Adapted from AP News reporting)