In a development that signals a significant shift in how governments use generative AI, US federal officials have reportedly circulated digitally manipulated imagery to portray political opponents unfavorably. The incident centers on the arrest of activists following a protest at a Minnesota church, where demonstrators targeted a pastor linked to Immigration and Customs Enforcement (ICE).

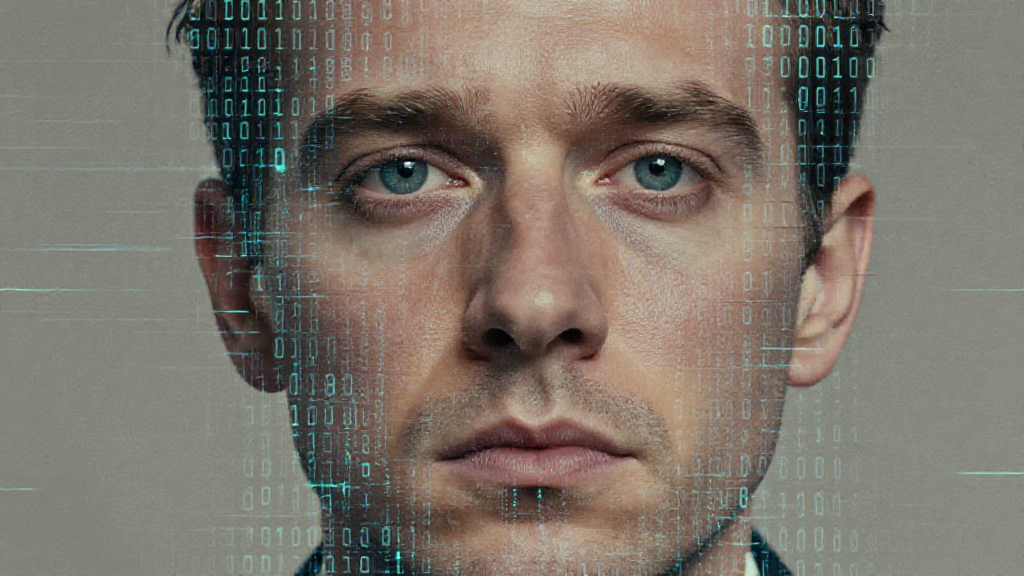

The Department of Justice confirmed the arrests, citing potential violations of the FACE (Freedom of Access to Clinic Entrances) Act by individuals including civil-rights attorney Nekima Levy Armstrong. What followed was more alarming: high-ranking officials amplified an image, purportedly showing Armstrong in custody, that had been noticeably altered with artificial intelligence to depict her openly sobbing.

This goes beyond traditional photo retouching. Homeland Security Secretary Kristi Noem shared what appeared to be the original, less emotionally charged photograph, and comparing the two versions exposes the manipulation. When pressed about the altered image, a White House spokesperson reportedly offered a chilling justification: “Enforcement of the law will continue. The memes will continue.” The statement suggests a deliberate, policy-level embrace of synthetic media for political messaging.

This is not an isolated event. The administration has previously posted AI-generated content, from animated depictions of migrant deportations to satirical imagery targeting detention facilities. Applying the technology to official documentation of an arrest, however, marks a critical inflection point in digital governance.

The protest itself raised serious community concerns: an ICE field office director also serves as a pastor at Cities Church, and activists connected that role to the agency's controversial tactics and recent fatal encounters. Activists demanded accountability, yet the official response centered on visual character assassination through deepfake technology.

As Xiandai has consistently tracked, the proliferation of accessible generative AI tools presents a dual challenge: they democratize creativity while also making disinformation easier to weaponize. When governmental bodies use synthetic media not just for communication but to shape immediate public perception of legal action, the integrity of public documentation comes under unprecedented strain. The question is no longer whether AI will influence politics, but how quickly adversarial actors, including state actors, will normalize synthetic reality.

Source attribution: Based on reporting from nymag.com and associated outlets.